Research Projects

Current Projects

CAREER: Partitive Solid Geometry for Computer-Aided Design: Principles, Algorithms, Workflows, & Applications

Program: Engineering Design and Systems Engineering

Sponsor: National Science Foundation

Duration: August 2021 - July 2026

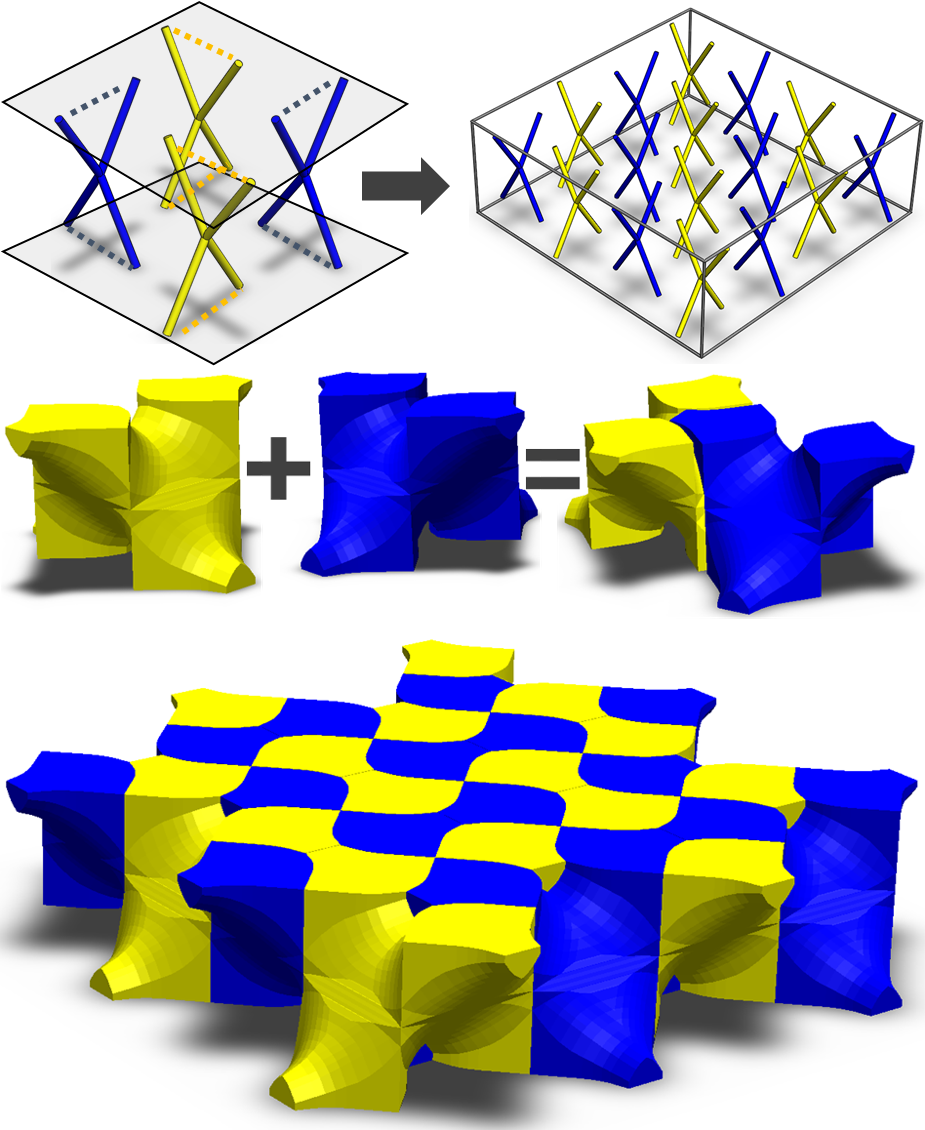

This Faculty Early Career Development Program (CAREER) grant will establish the foundations of a new geometric modeling framework – partitive solid geometry – for the design of complex two- and three-dimensional geometric patterns. Modeling complex patterns, such as cellular structures, is intrinsic to several areas of national interest, including consumer products, protective gear for sports and the military, and curved miniaturized electronics. Making complex geometric modeling accessible to more designers and engineers is key to facilitating disruptive innovations in many engineering disciplines, including the automotive, aerospace, construction, additive manufacturing, mechanics, thermo-fluids, and acoustics fields. Current tools for generative design and modeling are highly automated, which makes it difficult for designers to explore new ideas and ask what-if questions. A new representation of geometric solids is needed that will allow designers to apply their expertise, ideas, and creativity in designing cellular structures.

This research will introduce space-filling shapes as the underlying novel shape representation for partitive solid geometry, which will enable forward design workflows for the creation of complex shapes and structures for generative and procedural design. Such a representation should also simultaneously support computationally efficient mechanical evaluation, such as finite element simulation. This research will make complex geometric modeling available to all, real-time and intuitive to interact with, useful for serious engineering design, and usable for recreational learning. Efficient and robust algorithms will be developed and embedded within interactive software workflows that will allow users to directly and intuitively create shapes that were not possible before. A hypothesis-driven approach will be taken to investigate partitive solid geometry for designing a wide variety of interlocking shapes, functionally graded cellular structures, and auxetic materials. The tools resulting from this work will enable novice and expert designers to creatively apply their knowledge in applications ranging from the design of new materials to new architectural forms and safe products. This grant will further enable a new type of learning mechanism, where younger audiences will be able to easily generate complex shapes, prototype them as puzzles through 3D printing, and play with the puzzles to discover basic principles of geometry. This will fundamentally transform the way children develop their spatial reasoning ability through hands-on design and prototyping activities.

DARES - Distributed Autonomous Robotic Experiments and Simulations

Collaborators: Zohaib Hasnain - Mechanical Engineering, Texas A&M University

Sponsor: Army Research Office, Department of Defense

Duration: March 2021 - February 2026

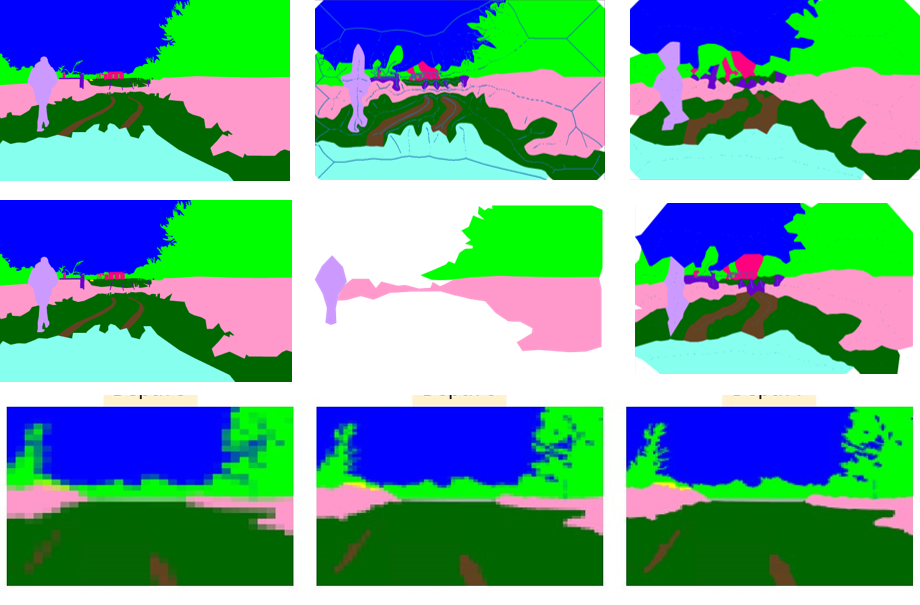

Modern interactive autonomous robotic agents require the ability to understand and adapt to the world around them. This includes the ability to represent and reason about the environment they operate in as well as the other agents they interact with. Existing autonomous systems handle this either by directly fusing and interpreting multi-sensory information from environmental sensors or, more practically, by understanding and reacting to a reduced form of this data. Additionally, when such a system needs to share information over both short and long distances, it must account for low bandwidth and intermittent connections. The objective of this research is to develop geometric representations of the environment that (a) are compressible for transmission over lossy networks, (b) can represent different objects in a scene at different levels of detail, and (c) respect temporal variations in the scene as the vehicle moves. The project will use the RELLIS-3D dataset developed at TAMU to develop an M-LOD modeling framework. The approach is based on the observation that the RGB and LiDAR representations in a multi-modal dataset offer different types of information. The central idea is to use an ontology-aware topological representation for M-LOD modeling of natural scenes. Based on user input, the proposed method should be able to generate a specific level of detail for identified semantic labels in a scene.

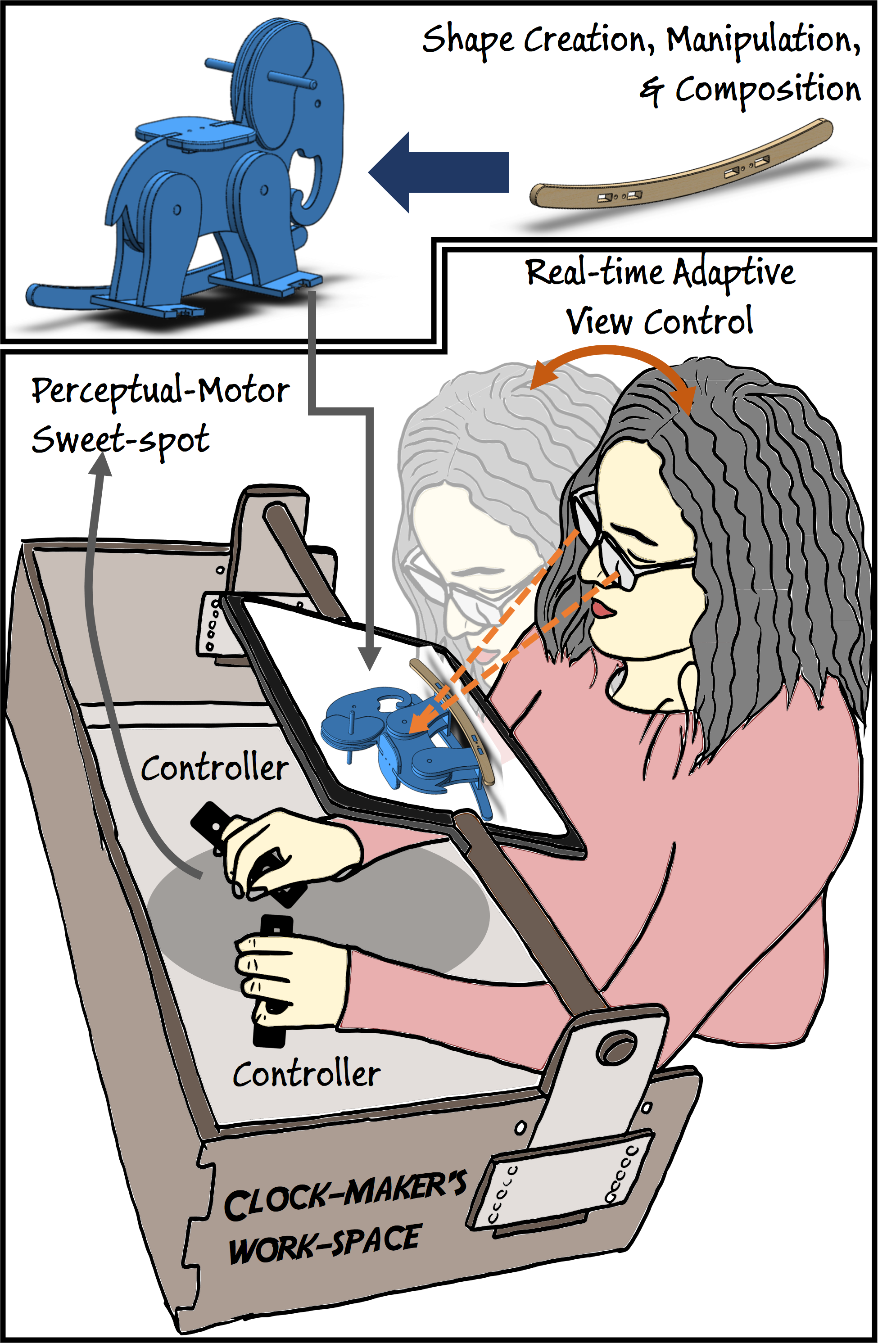

Creating a VR Workspace for Design by Physical Manipulation

Collaborators: Francis Quek - Visualization, Texas A&M University, Shinjiro Sueda - Computer Science, Texas A&M University

Program: Cyber-Human Systems

Sponsor: National Science Foundation

Duration: August 2020 - July 2023

From an early age, humans learn to see, touch, hold, manipulate, and alter their physical surroundings using both of their hands and tools. A sculptor holds clay in one hand and precisely carves out material to create an intricate work of art. A mechanic uses hands and tools to reach the most inaccessible nooks of a car's engine to make repairs. All who work with their hands, threading needles, carving soap sculptures, or wiring electrical wall sockets, know the extraordinary dexterity and precision they possess when working in the space within the near reach of both hands. The project investigates a new class of augmented and virtual reality (AR/VR) environments that allow designers to be more creative in this workspace. Existing AR/VR systems only allow users to interact at arm's length, making it impossible to leverage the innate human ability to use hands and tools for precise actions close to the body. As a result, the original promise of AR and VR in facilitating creative thinking and problem-solving is still unrealized. To address this, the project designs a workspace that enables designers to create virtual designs in three-dimensional (3D) space by mirroring the intricate, complex, and precise actions that humans can perform in daily life.

Fostering Engineering Creativity and Communication through Immediate, Personalized Feedback on 2D-Perspective Drawing

Collaborators: Tracy Hammond - Computer Science, Texas A&M University, Julie Linsey, Wayne Li - Mechanical Engineering, Georgia Institute of Technology, Kerrie Douglas, Purdue University, Vimal Viswanathan, San Jose State University.

Program: Improving Undergraduate STEM Education: Education and Human Resources (IUSE: EHR)

Sponsor: National Science Foundation

Duration: July 2020 - June 2024

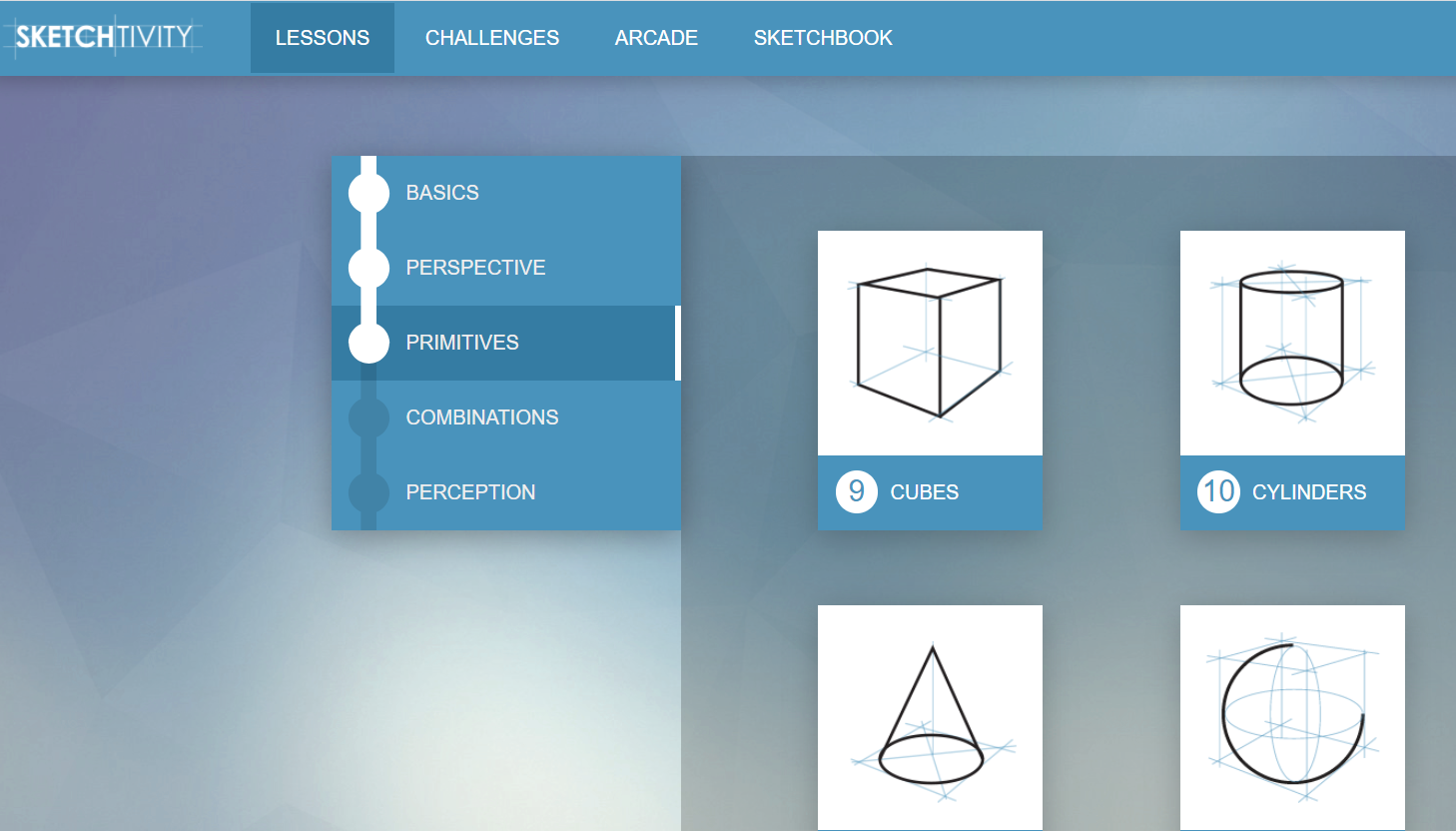

This project aims to serve the national interest in excellent undergraduate engineering education by improving students' ability to draw representations of structures and systems. Free-hand drawing is a crucial skill across engineering, especially for its ability to reduce complex systems to simplified, accurate diagrams. Such diagrams aid in idea generation, visualization of systems, and discussions between clients and engineers. When coupled with appropriate, timely feedback, drawing can also be an effective learning tool for improving students' visual communication skills and creativity. This project will use a computer application called SketchTivity to teach engineering students how to draw, and examine the impact of drawing instruction on student learning. SketchTivity is an intelligent tutoring system that provides real-time feedback on 2D drawings that students make on a screen instead of on paper. The application, which was developed by the research team, provides each student with iterative, real-time, personalized feedback on the drawing, promoting improvements and facilitating learning. Free-hand drawing and its associated benefits were inadvertently removed from engineering curricula when educators transitioned from hand drafting to Computer-Aided Design. This transition has resulted in a lack of student and faculty proficiency in free-hand drawing. This project will support the restoration of 2D-perspective drawing to engineering curricula. Since drawing is a skill that is also relevant to other STEM fields, this work is likely to have broad relevance in undergraduate STEM education.

Completed Projects

Fracture Fixation Training using a Hybrid Simulator with Data Visualization

Collaborators: Bruce Tai - Mechanical Engineering, Texas A&M University

Program: Surgical Skills Simulation Training for Residents Research Grant

Sponsor: The Orthopaedic Research and Education Foundation (OREF) and The American Board of Orthopaedic Surgery (ABOS)

Duration: September 2019 - December 2021

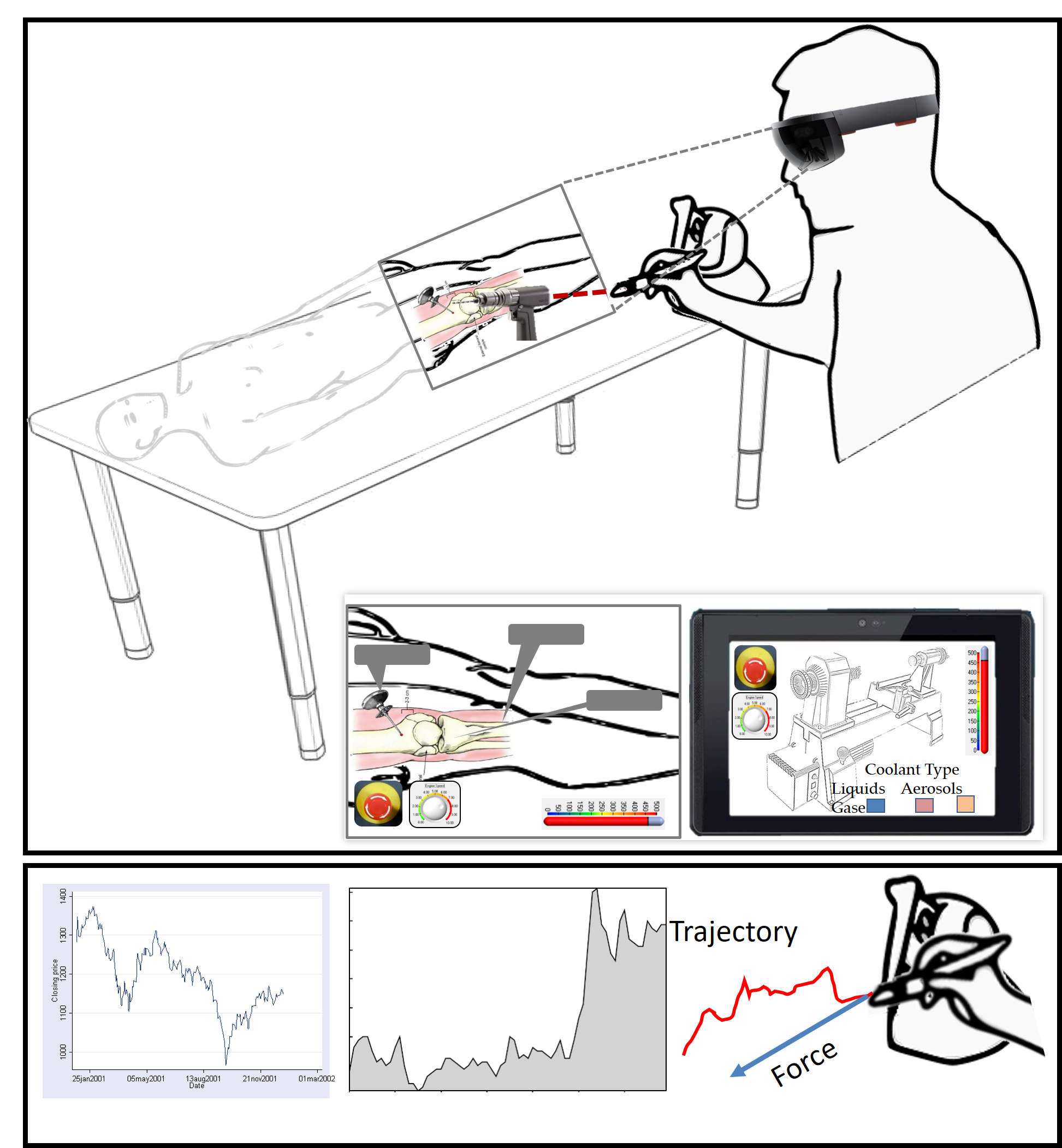

This project aims to develop and assess the utility of a simulation environment for training orthopaedic residents’ fracture fixation skills. Many different techniques have been proposed to improve resident training to both increase patient safety and reduce the time required for residents to become proficient with the basic skills of drilling into bone and placing bone screws. The proposed system is unique in that it will consist of both realistic physical models and visualization to provide feedback to both the operator and the instructor. Using 3D printing, the physical models allow us to replicate bones with varying geometries and material properties to provide the orthopaedic resident with a variety of common clinical scenarios to better simulate the resident’s experiences. Rare bone conditions or congenital deformities can be replicated in the 3D printed surrogates, or the surrogates could be swapped with cadaveric or animal specimens. This versatility allows for the full functionality of the feedback system to inform both the trainer and the trainee in settings with variable resources. We hypothesize that this proposed system will accomplish two goals: 1) it will improve proficiency in drilling and screw placement for orthopaedic residents compared to residents trained with traditional methods and 2) the real-time precise force, torque and position data will allow easy, accurate and objective assessment of trainees.

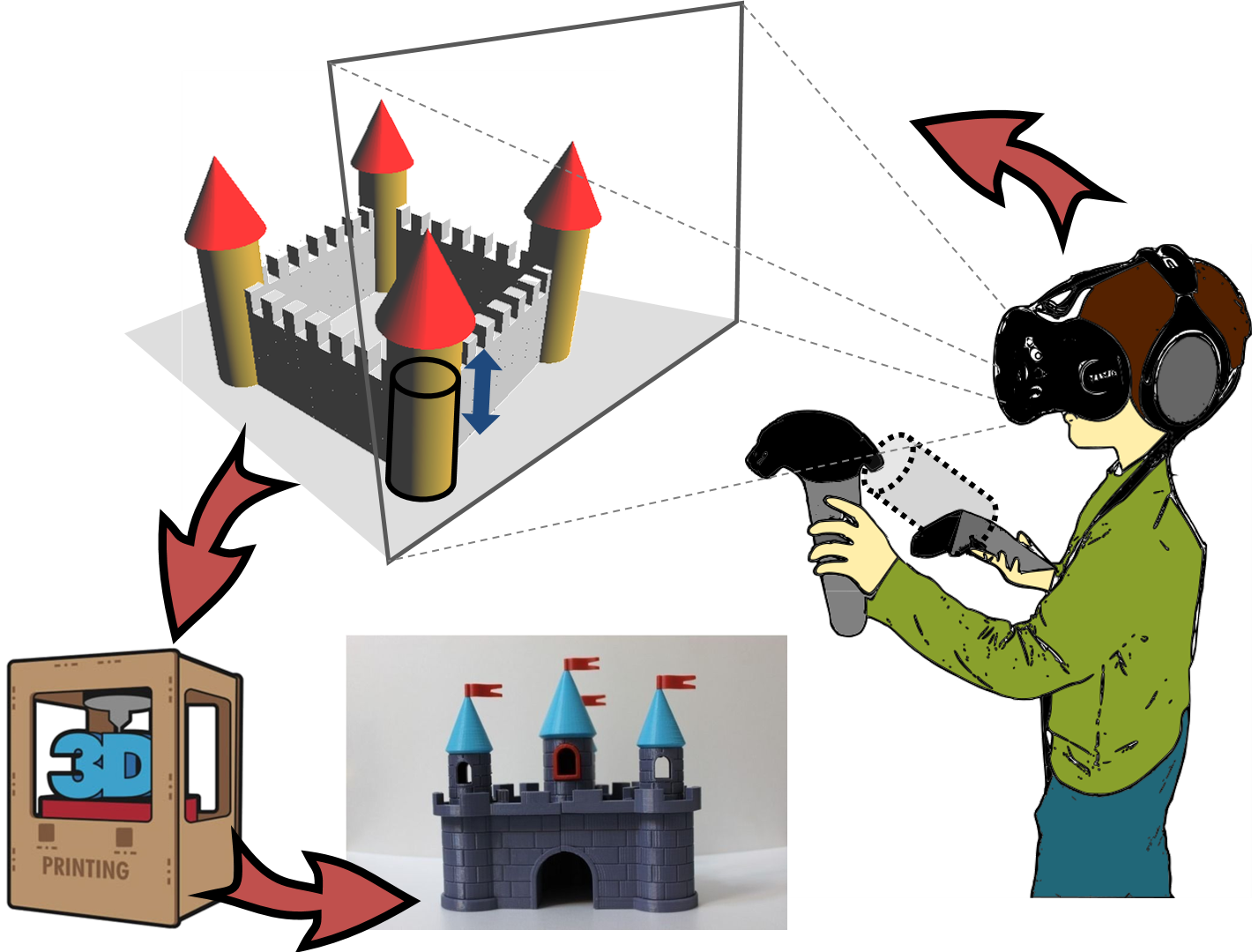

Tangible Augmented Reality Design Workflows for Digital-to-Physical Prototyping by Children

Collaborators: Francis Quek - Visualization, Texas A&M University, College Station, TX, Shinjiro Sueda - Computer Science, Texas A&M University, College Station, TX

Program: T3: Texas A&M Triads for Transformation

Sponsor: President’s Excellence Fund, Texas A&M University

Duration: May 2019 - May 2020

This project aims at enabling young students to experience three-dimensional geometric concepts in a tangible and immersive manner. Children, especially those in elementary school, have difficulty with reasoning about geometric concepts and spatial manipulation. Although these skills are essential for various STEM-related fields, three-dimensional (3D) concepts pertaining to parametric objects (e.g. spheres, cones) are currently taught only as mathematical abstractions. The idea behind this project is to embed tangibility and physicality of objects that a child can see from all directions and feel through physical touch. Humans learn to see, touch, hold, manipulate, and alter their physical surroundings using their hands from an early age. We are investigating augmented reality (AR) interfaces that are customized with a novel hand-held device for controlling parametric objects. The idea is to design a device that can serve as a universal controller for parametric modification of shapes. The shapes could be spheres, cylinders, cones, or cuboids with parameters such as radius, extrusion-depth, angles, or sides respectively. With such a system, a child will be able to use bi-manual (two-handed) manipulation to create, compose, modify, and control parametric geometric objects.

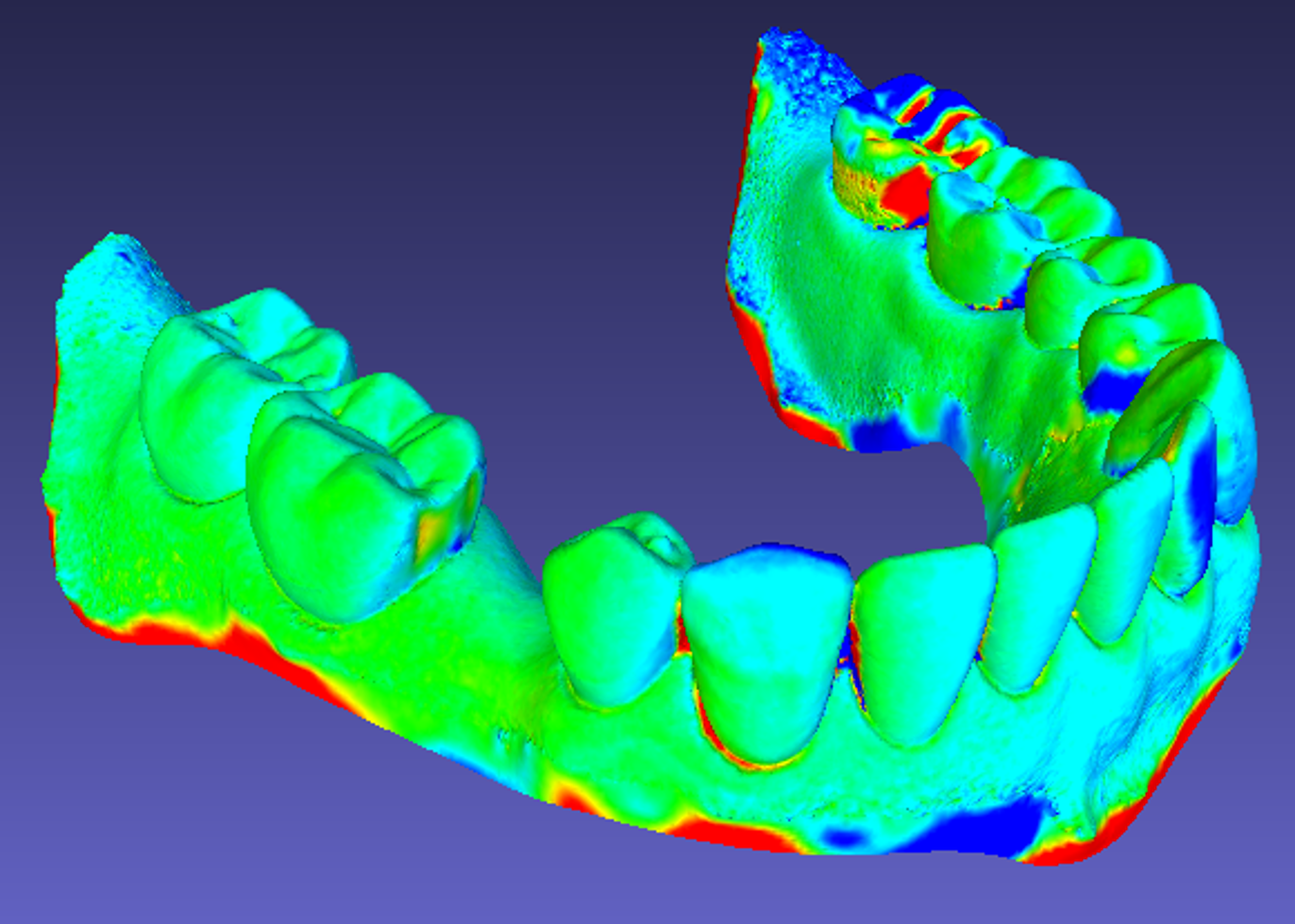

Influence of scanning light conditions on the accuracy (precision and trueness) and mesh quality of different intraoral digitizer systems.

Collaborators: Marta Revilla-León, Wenceslao Piedra, Amirali Zandinejad - College of Dentistry, Texas A&M University, Dallas, TX

Duration: June 2018 - December 2018

The goal was to measure the impact of various light conditions (dental chair light, room light only, natural light, and no light) on the accuracy and mesh quality of four intraoral scanner (IOS) systems when performing a digital impression. A typodont and a patient-simulating bench were used. The mandibular typodont arch was digitized using an extraoral scanner (L1 Imetric scanner; Imetric) to obtain an STL file. Three IOSs were analyzed: IE (iTero Element; Cadent LTD), CO (Cerec Omnicam; Cerec-Sirona), and TS (Trios 3; 3Shape). Each was evaluated at four ambient light settings: CL (chair light), RL (room light), NL (natural light), and ZL (no light). Ten digital impressions per group were made, for a total of 160 digital impressions. All intraoral scans were performed according to the manufacturer’s instructions by the same operator. The digitized STL file of the mandibular arch was used as a reference to measure the distortion between the digitized typodont and all the digital impressions performed, using 3D evaluation software (Geomagic Control X, 3D Systems).
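
The accuracy terms in the project title can be made concrete with a small sketch. It assumes that each digital impression has already been reduced to a single deviation value against the reference STL (for example, an RMS surface distance exported from the 3D evaluation software). Under that assumption, trueness can be summarized as the mean deviation within a scanner/light group and precision as the spread of the repeated scans. The function name, the per-scan summary, and the numbers are illustrative assumptions, not the study's data or its exact analysis.

```python
from statistics import mean, stdev
from typing import Dict, List


def accuracy_summary(deviations_um: List[float]) -> Dict[str, float]:
    """Summarize one scanner/light group (hypothetical helper).

    trueness:  mean deviation from the reference (lower = closer to the reference)
    precision: sample standard deviation across repeated scans (lower = more repeatable)
    """
    if len(deviations_um) < 2:
        raise ValueError("need at least two scans to estimate precision")
    return {
        "trueness": mean(deviations_um),
        "precision": stdev(deviations_um),
    }


# Made-up deviations in micrometers for a single group, for illustration only.
group = [50.0, 52.0, 48.0, 50.0]
summary = accuracy_summary(group)
print(summary)
```

Keeping the two quantities separate matters because a scanner can be repeatable (good precision) while still being consistently offset from the reference (poor trueness).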

Augmented Reality-Coupled Haptic System for Orthopaedic Surgery Training

Collaborators: Bruce Tai - Mechanical Engineering, Texas A&M University, College Station, TX, Mathew Kuttolamadom - Engineering Technology and Industrial Distribution, Texas A&M University, College Station, TX

Program: AggiE_Challenge 2017-2018

Sponsor: College of Engineering, Texas A&M University

Duration: September 2017 - May 2018

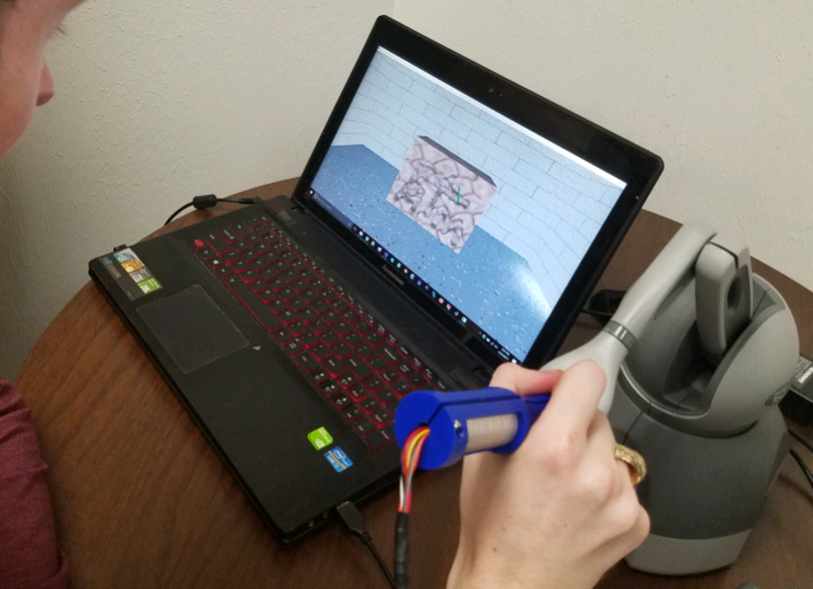

The goal of this project is to develop an augmented reality (AR) system coupled with a haptic device for training in manually operated machining operations, such as drilling and burring. These skills are needed not only in manufacturing shops but also, very commonly, in orthopaedic surgery, where skill development is costly and time-consuming due to the required space, equipment, and personnel (attending surgeons). To accommodate the high demand for surgical training, the central idea is to use a compact AR system that provides both visual and haptic feedback to emulate real operations. The visual feedback includes both virtual objects and operating information to guide the user and thus enable self-learning, as shown in Fig. 1. Furthermore, operating parameters and performance can be quantitatively recorded for skill evaluation, characterization, and analysis. This project builds on our prior work on the vibration-added haptic device and graphic computation, and continues our search for virtual-reality training applications in healthcare.

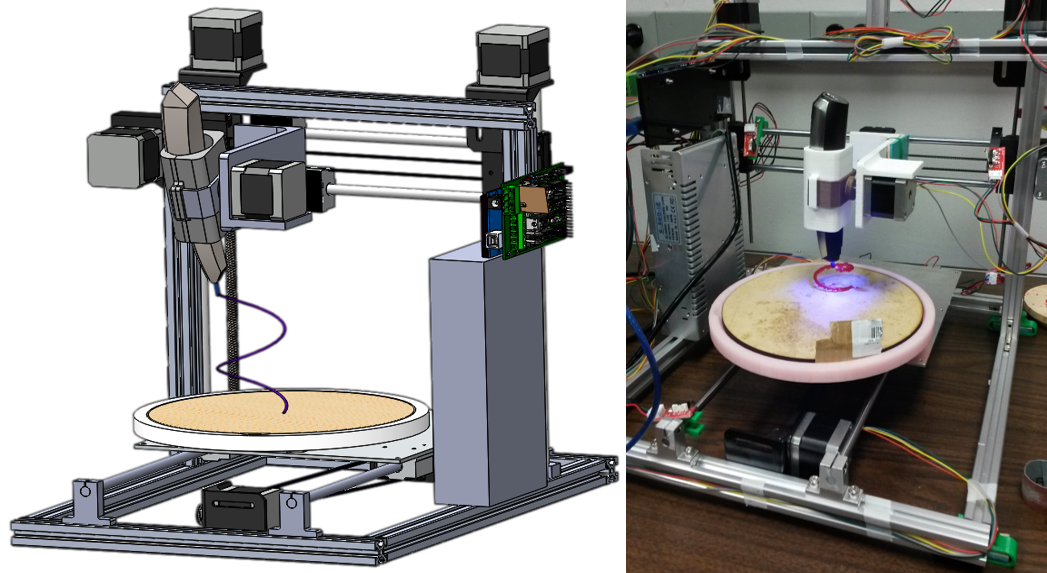

DoodleBot-Interactive Robotic System for Additive Manufacturing of 3D Curve-Networks

Collaborators: None

Program: None

Sponsor: Texas Engineering Experiment Station and the Department of Mechanical Engineering, Texas A&M University

Duration: January 2017 - August 2017

The goal of this project is to design and build a robotic system that allows for interactive printing of curve-networks using existing off-the-shelf 3D printing pens (such as the 3Doodler). The system should be able to automatically take a 3D model of a curve as input, compute the tool-path from this model, and print the curve-network from the tool-path. The system should be able to print a wide class of 3D curve-networks that (a) fit within a 1.0 ft × 1.0 ft × 1.0 ft working volume and (b) can include meshes, knots, or patterns. The system should be modular and reconfigurable, i.e., it should be possible to attach different types of 3D pens (such as Lix) available in the market.
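
As a rough illustration of the pipeline described above (curve input, tool-path computation, printing), the sketch below checks that a curve-network fits in the stated 1.0 ft cubic working volume and flattens it into an ordered list of pen moves. The polyline data structure, the function names, and the "travel"/"extrude" move labels are assumptions made for illustration, not the project's actual implementation.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
Polyline = List[Point]

WORKING_VOLUME_FT = 1.0  # edge length of the cubic working volume stated above


def fits_working_volume(curves: List[Polyline], edge: float = WORKING_VOLUME_FT) -> bool:
    """Return True if the axis-aligned bounding box of all points fits in an edge^3 cube."""
    points = [p for curve in curves for p in curve]
    if not points:
        return True
    for axis in range(3):
        values = [p[axis] for p in points]
        if max(values) - min(values) > edge:
            return False
    return True


def to_tool_path(curves: List[Polyline]) -> List[Tuple[str, Point]]:
    """Flatten curves into moves: 'travel' to each curve's start, then 'extrude' along it."""
    moves: List[Tuple[str, Point]] = []
    for curve in curves:
        if not curve:
            continue
        moves.append(("travel", curve[0]))  # reposition the pen without depositing material
        for point in curve[1:]:
            moves.append(("extrude", point))  # deposit material while moving to this point
    return moves


# Hypothetical two-curve network, coordinates in feet.
network = [
    [(0.0, 0.0, 0.0), (0.5, 0.0, 0.0), (0.5, 0.5, 0.25)],
    [(0.5, 0.5, 0.25), (0.0, 0.5, 0.5)],
]

if fits_working_volume(network):
    for kind, target in to_tool_path(network):
        print(kind, target)
```

A real system would also need to order the curves so that printed segments support later ones and to avoid collisions between the pen and printed material; the sketch ignores both.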

Design of a Vibro-Coupled Haptic Device for Virtual-Reality Surgical Drilling

Collaborators: Bruce Tai - Engineering Technology and Industrial Distribution, Texas A&M University, College Station, TX, Mathew Kuttolamadom - Engineering Technology and Industrial Distribution, Texas A&M University, College Station, TX

Program: AggiE_Challenge 2016-2017

Sponsor: College of Engineering, Texas A&M University

Duration: September 2016 - May 2017

Virtual-reality (VR) simulation has been increasingly used in surgical training, particularly for complex and high-risk procedures. A high-fidelity simulator requires a detailed 3D environment and realistic haptic feedback. Our grand challenge is to enhance the haptic device with force-vibration coupled feedback for minimally invasive operations involving power tools, such as drilling and burring. The goals of this project were to design a new component with a vibration actuator to retrofit current commercial haptic systems and to develop algorithms for the newly integrated systems. Psychophysical studies were performed to determine the limits of human perception of the vibration.